If it's online, Skuuudle can scrape it.

Skuuudle provides hundreds of billions of eCommerce data points every year from websites our clients nominate, no matter how complex the site, no matter how sophisticated the anti-scraping and CAPTCHA technology.

Our data outputs and transfer methods adapt fully to our clients’ requirements, making integration with existing data pipelines easy.

Skuuudle combines technology 15 years in the making with the expertise of our human quality team to deliver data at unprecedented scale and accuracy.

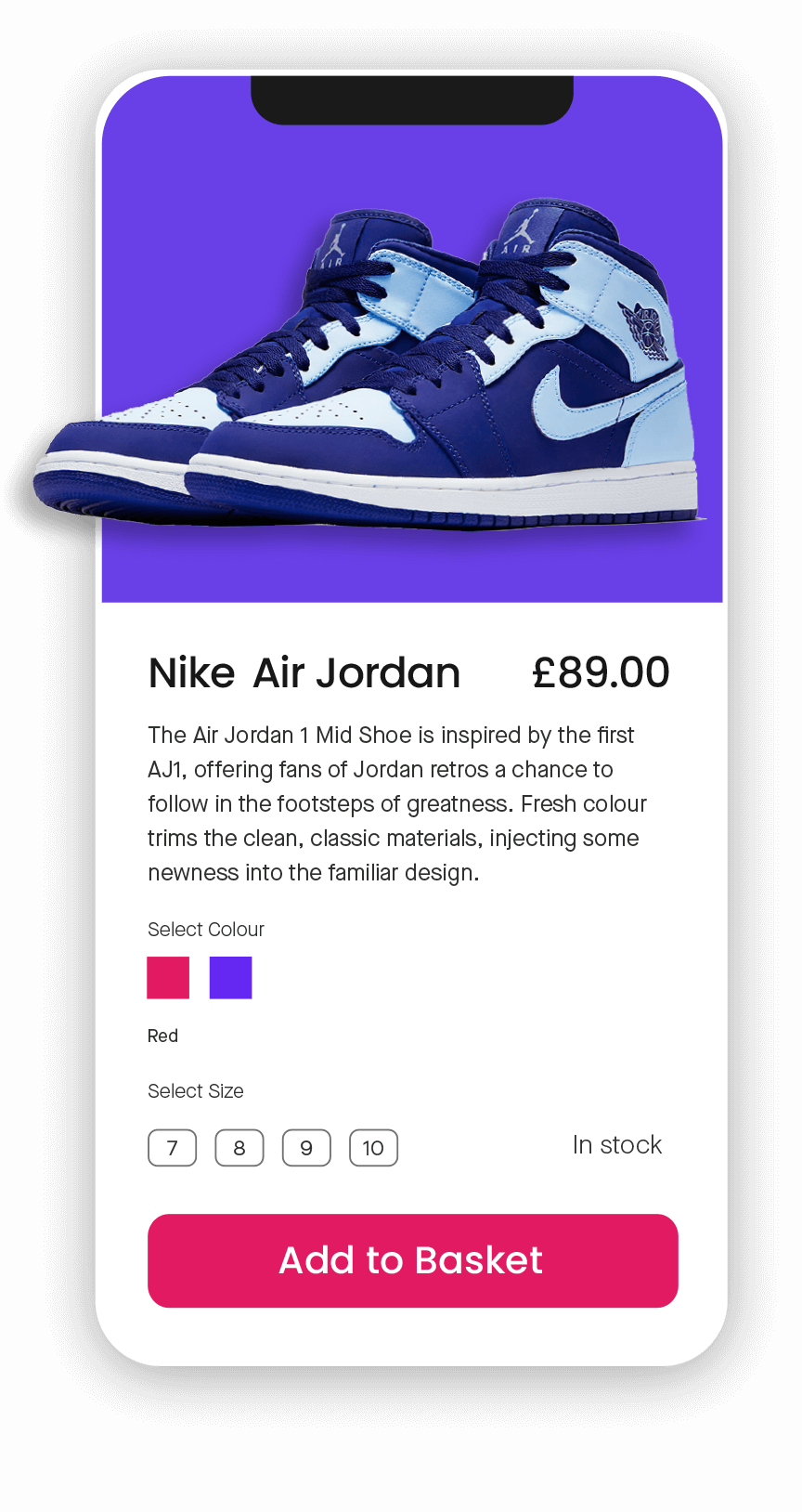

Brand name

We can find and store competing brands for the same or similar products.

Attributes

Track and compare a product's colour, size, functions, and features.

Product name

We collect the exact product name and match it with your product.

Price & promotion

We have the most accurate pricing data in the industry and can track discounts and promotions.

Product description

Reveal your competitors' marketing tricks, then supercharge your own product descriptions to gain an advantage.

Availability

Skuuudle can track product availability and store historical availability data.

Don't risk the regret of choosing a cheap, low-quality scraping solution.

Price Scraping FAQs

When you need to track your competitors’ prices on eCommerce and online shopping websites, Skuuudle’s software automates the process for you.

Skuuudle’s well-established automated software collects prices, descriptions, pack sizes, product codes, product names and even currency and language from the websites you request.

- Businesses take advantage of price scraping technology to automatically collect pricing data from competitors.

- Retailers, manufacturers and brands use price scraping to gain valuable insights into their competitors’ pricing and optimise their own pricing strategies to maximise profits.

- Identify pricing discrepancies between different markets and channels.

- Recognise pricing trends and pricing opportunities, so you can adjust prices to maximise your market position.

- Identify opportunities for price savings, promotions and discounts.

- Make better informed pricing decisions.

- Stay competitive and maximise profits.

Choose a reliable provider: Accurate price scraping providers quality-check the data before it reaches you, so you can make better pricing decisions.

Quality doesn’t come cheap: Consistently delivering high-quality price scraping is hard and expensive, so don’t be duped into buying a cheap solution.

Brands and own-label products: If your assortment includes your own products, make sure any provider you consider can scrape prices for your own-label products and match them to competing products.

eCommerce Web Scraping

We are the pricing intelligence vendor of choice for large enterprises.